Linguistics: now with magnets, gamma rays, and lego bricks!

Following up on the previous post, something I think about from time to time is how to translate what I do in my research to a non-linguistic audience. Not because I have an uncontrollable missionary urge or because I feel scientific research cannot have a place in our society unless it is "useful" in some concrete—usually economic—sense, but mostly because I feel people are missing out on all the incredibly cool stuff that we find in natural language. Part of this is arguably also 'hard science envy': what do people think of when you say you're going to show them a cool science experiment? They think of a roll of Mentos going down a Coke bottle, or the Crazy Russian Hacker making a bubble making machine out of soap and dry ice. Whatever it is, I can guarantee you no one is thinking of a linguist, standing in the corner of the room, saying something like: "Hey guys, you know what's really cool about this sentence?"

My physics envy was aggravated recently as I was watching De Schuur van Scheire, a show on Belgian national television, in which a group of nerds has fun trying out all kinds of tricks, experiments, and hacks. Not surprisingly—especially considering the physics background of the host of the show—all these hacks have a strong natural science or engineering bias. Recent examples include a home-made battery, a bike with a pulse jet engine, and Ikea-hacking. The social sciences are completely absent in this show. Understandably so, right? I mean, who needs a bunch of boring men and women reading boring books and writing even more boring papers about those books? That doesn't make for exciting science experiments (let alone exciting television). Well, I beg to differ: linguistics can be every bit as cool, nerdy, and exciting as the hard sciences. As an illustration, I give you: linguistic magnets, gamma rays, and lego bricks!

magnets

Magnets, Wikipedia informs us, are materials or objects that produces a magnetic field. This field is invisible but it pulls on some materials, such as iron, and repels others, such as other magnets. Exciting, right? Invisible stuff attracting or repelling other stuff! I bet you never find that in natural language. Wrong. Allow me to set up two magnets (a positive and a negative one if you will):

- John is helping.

- John isn't helping.

There, that was easy. Now let's throw some material into the invisible field created by these magnets. I'm going to start with the word anyone. It is attracted by the negative magnet but repelled by the positive one:

- *John is helping anyone.

- John isn't helping anyone.

See how that first example went wrong? The positive magnetic field created by the sentence is repelling anyone so strongly that it wants to push it right out of the sentence. Now let's try the opposite. We're going throw in pretty well. This expression has the opposite magnetic properties of anyone: it is attracted by positive magnets, but repelled by negative ones:

- John is helping pretty well.

- *John isn't helping pretty well.

Now let's see what happens if we start stacking magnets. If I take the positive magnetic field trying to repell anyone in example 3 and put a negative magnetic field on top of it, the attraction between anyone and the negative force is stronger than the repulsion from the positive force:

- I don't think John is helping anyone.

But if we try the opposite, i.e. if we embed the repulsion of pretty well in example 6 in a larger positive magnetic field, it still goes wrong:

- *I think John isn't helping pretty well.

Believe me when I say that this is just the tip of the magnetic linguistic iceberg. We have no time to linger, though, as we have gamma rays to get to!

gamma rays

Gamma rays—thank goodness for Wikipedia—are a type of electromagnetic radiation of an extremely high frequency. They are basically high energy photons. As you might have guessed from my repeated use of the adjective high in the preceding sentences, gamma rays are pretty dangerous and pretty powerful. Just to give you an idea: if we install a gamma ray cannon—if such a thing exists—on one side of the room and put a plywood door in its line of fire, the gamma rays would have no trouble reaching the other side of the room, straight through the door. Same story if you put two plywood doors in between. Or three. Or ten. Or a hundred. In fact, if you want to stop the gamma rays, you're going to need an inches thick slab of lead.

Gamma ray cannons may or may not exist in real life, but they certainly do in natural language. We call them questions. The gamma rays that shoot out of them are called question words. Typical examples are who, what, where, etc. Let's warm up our cannon with a simple shot:

- What is John eating?

Note how the question word what does not appear to the immediate right of the verb eating, where we typically find the foods in this sentence (cf. John is eating an apple). Instead, it has an uncontrollable urge to move all the way to the left, like, well, a gamma ray shot out of a gamma ray cannon. Now let's put a plywood door in the line of fire of this cannon:

- What does Mary say that John is eating?

Sentence boundaries are the plywood doors of language. Note that the question word what in this example still refers to the food that John is eating. This means that it started out to the immediate right of the verb eating, but from there it has shot leftward uncontrollably. In particular, it has blasted through the sentence boundary marked by that without even the slightest hesitation. So let's give our cannon a bit more of a challenge: we're going to put two plywood doors in the line of fire:

- What does Sally think that Mary says that John is eating?

Boom! Straight through, as if the doors simply aren't there. Clearly we need a stronger boundary. Let's try putting five plywood doors blocking our gamma ray cannon:

- What do you think that Paul claims that Ellen believes that Sally thinks that Mary says that John is eating?

Like actual gamma rays, our question word keeps shooting through sentence boundaries as if they're simply not there. In order to stop these rays, we need to find something denser. We need to find the linguistic equivalent of a thick slab of lead. Turns out that a particular type of clause boundary is made of lead. Imagine John was eating something at the same time that Mary was off to the store, and you would like to know what that was. You load up your gamma ray cannon, fire off your question word, and BAM! it hits a lead wall:

- *What is Mary off to the store while John is eating?

So there you go: that is made of plywood, while while is pure lead. Next time you need to shield yourself from gamma rays, choose your complementizers wisely!

lego bricks

Lego bricks are the ultimate nerd toys: emminently hackable, limited only by one's own imagination, and a wide gamut of Star Wars merchandising. If only there were a way of having them with us all the time. Well, look no further: the very words you speak are nature's best lego bricks. Consider for example the following (not very uplifting but perfectly grammatical) sentence:

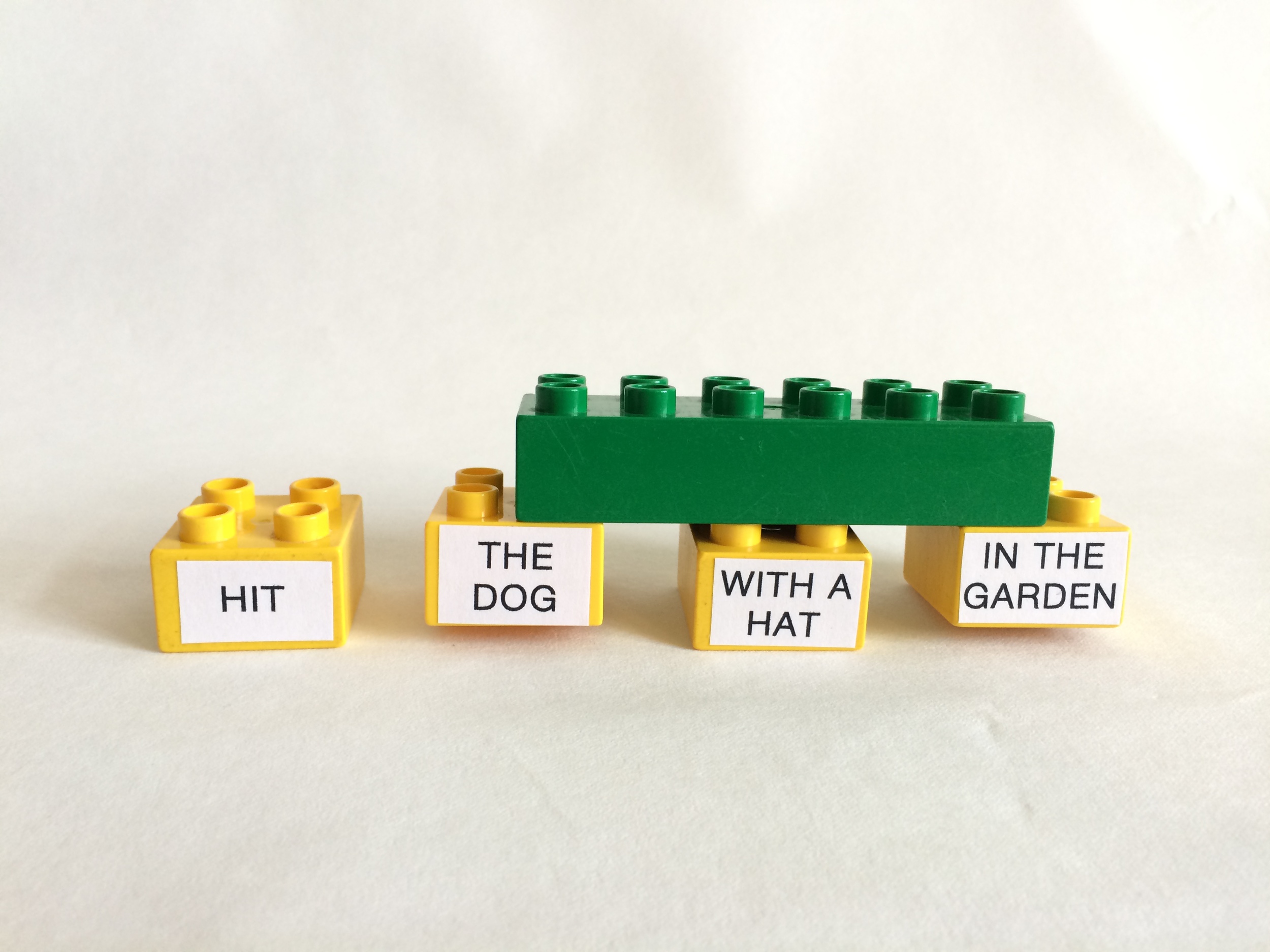

- John hit the dog with a hat in the garden.

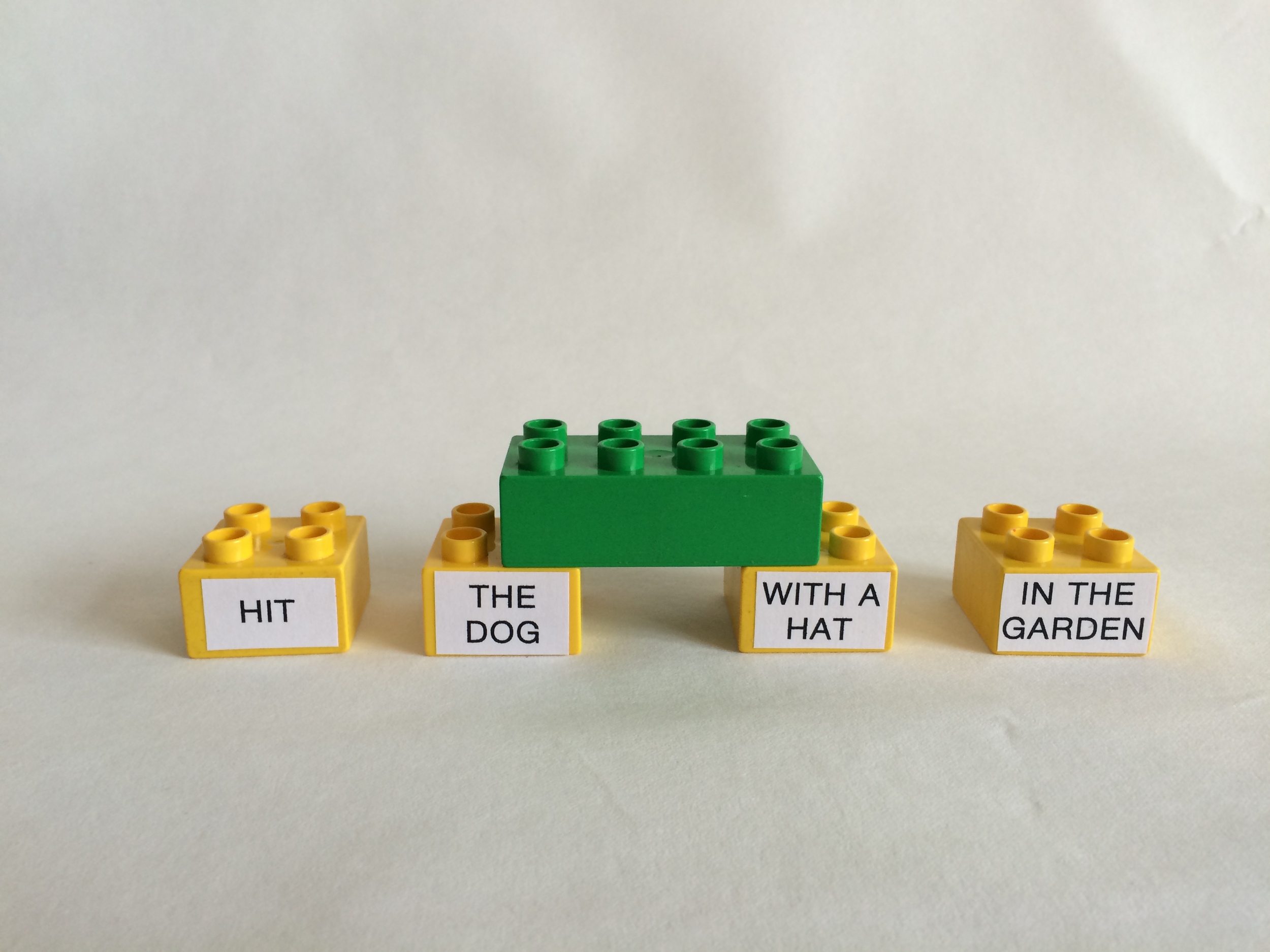

Let's forget about John for the moment; he won't have any role to play in the remainder of the discussion. The rest of the sentence is best represented as follows:

What can you do with bricks like these? You can start building bigger structures of course. Well, here's a kicker for you: the towers you can build based on these four bricks correspond exactly to the different interpretations of the example in 1. Let's see what I mean by doing some actual building. Suppose we start by connecting the first two bricks:

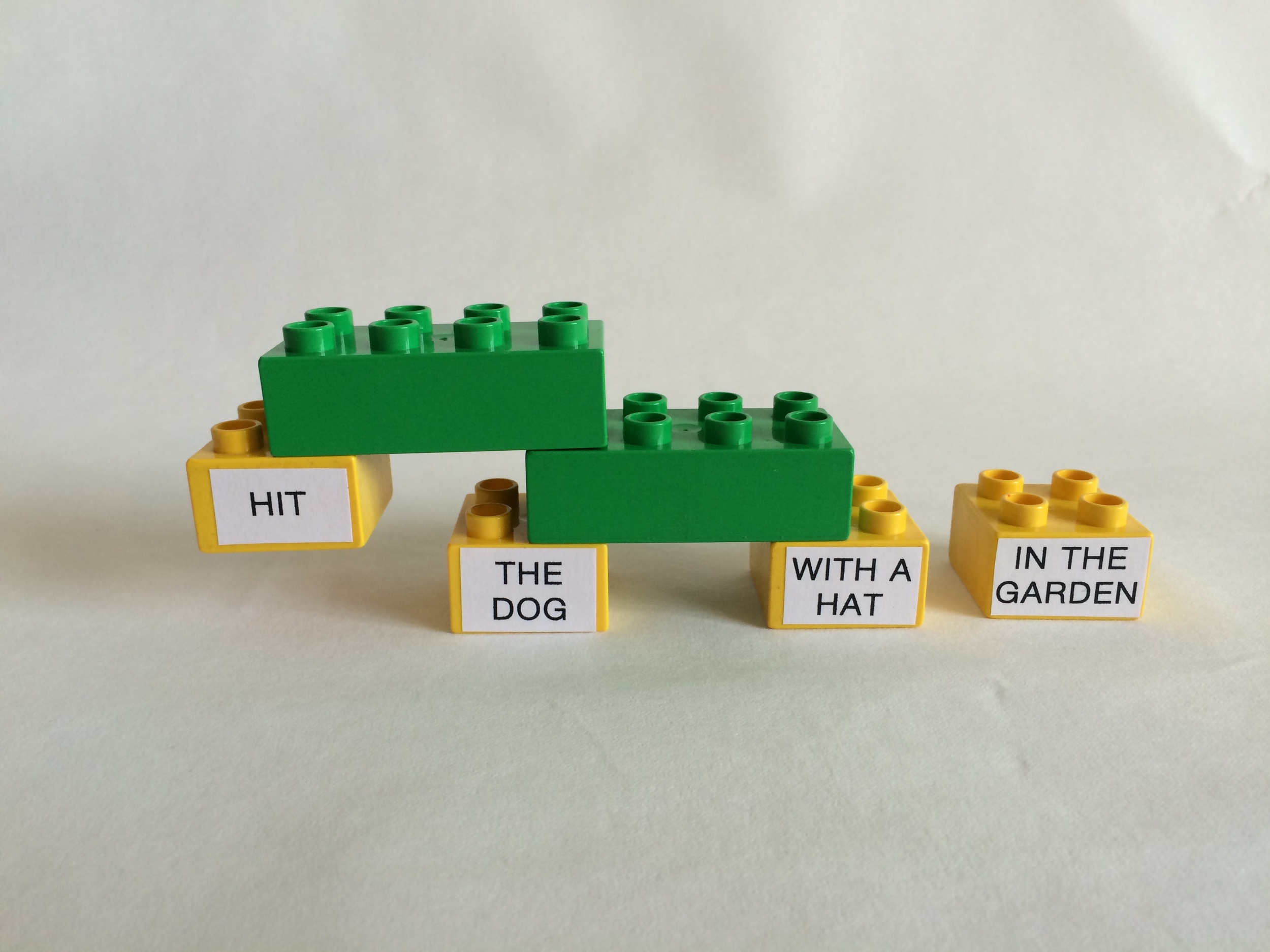

We've now created a little tower that contains both hit and the dog, i.e. we're saying that part of the interpretation of this sentence involves a dog getting hit (which seems pretty accurate). Let's continue building:

The second green block has joined hit the dog and with a hat, i.e. we're now saying that the dog-hitting was performed using a hat. Time for the final step in our tower:

The final brick combines hit the dog with a hat with in the garden. This gives us a nice four-stage tower—though one that is leaning heavily to the right—and it also completes the interpretation of the sentence: a dog was being hit using a hat, and the hitting took place in the garden. However, just like this is by no means the only way we can put these four yellow bricks together, it is also by no means the only interpretation this sentence can have. Suppose we had started off as follows:

This time the first green brick combines the dog with with a hat. What does this mean? It means we're not talking about any old dog. No sir, we're talking about this feisty specimen:

Combining the second and third yellow brick tells us that we are dealing with a dog who's wearing a hat. If we now want to express that it is this unfortunate creature that's being hit, we have to join the first brick to our tower:

The final step is the same as in our first tower; the fourth yellow brick is added to the structure:

Our second tower is done, as is our second interpretation of this sentence: this time, the creature that's being hit is a dog wearing a hat. The sentence provides no information as to the instrument of the hitting (could be a hat, could be a baseball bat, could be anything), but it does tell us the hitting took place in the garden.

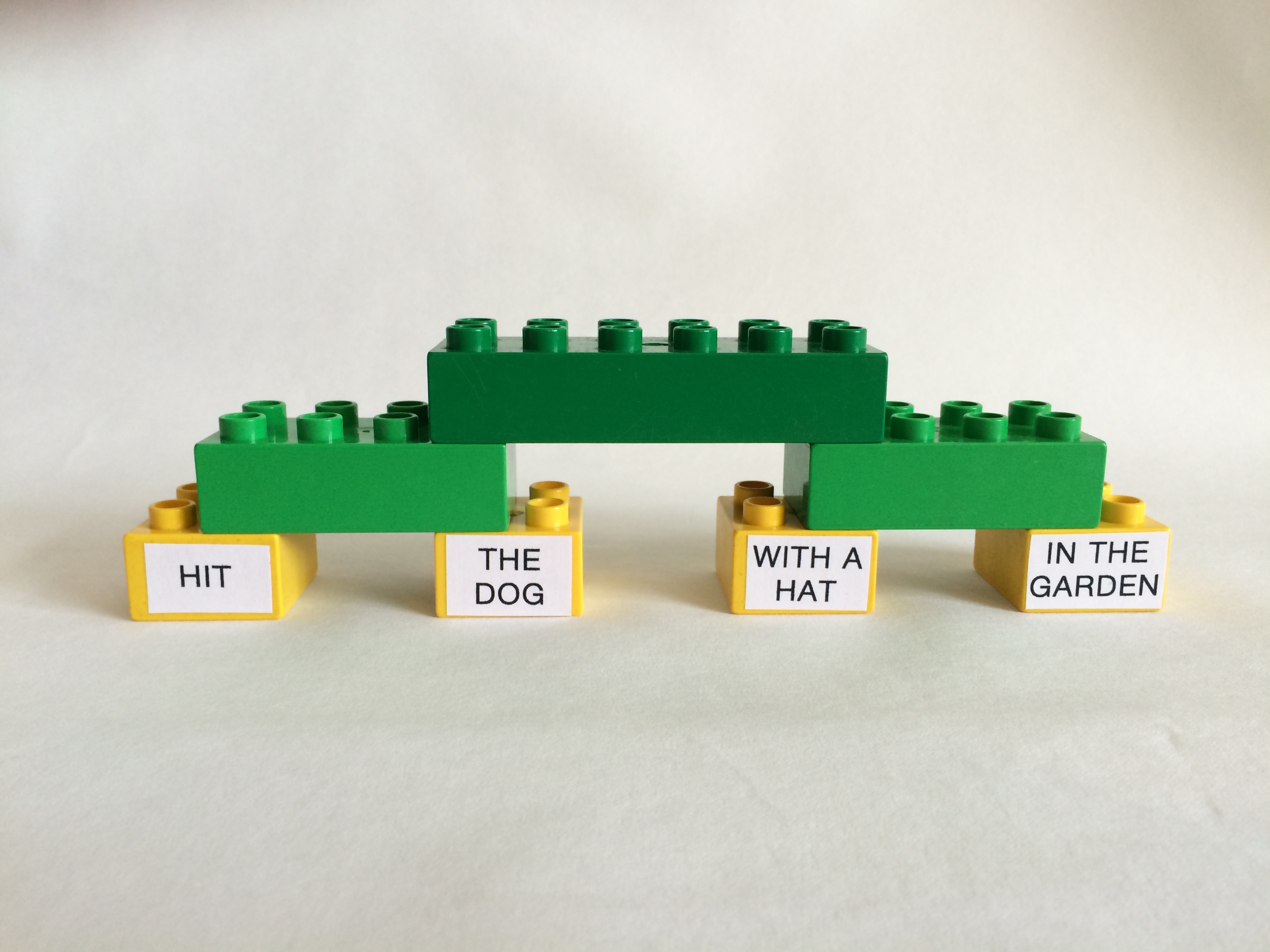

Once again, though, we have exhausted neither the combinatorial possibilities of our lego bricks, nor the possible interpretations of this sentence. Suppose we had combined our hat-wearing dog not with hit, but with in the garden:

What does this mean? It means we're not focusing on just any old hat-wearing dog. It turns out John has hat-wearing dogs all over his house: he has one in the kitchen, in the basement, in the bathroom, and he also has one in the garden. And today, he's decided to exercise his animal cruelty on that latter dog:

Behold tower number three and, like its shadow, interpretation number three right behind it. What this says is that the dog that is being hit is the hat-wearing specimen that resides in the garden. Under this interpretation, the sentence provides no information about the instrument used to perform the hitting or the location where the hitting took place.

Three different interpretations for that one (simple) example in 1. Surely we've exhausted this sentence now, right? Well, have we exhausted our tower building options? Clearly not:

Now we start by combining the last two bricks, and once again, we need to ask ourselves: what is the linguistic correlate of this real-world building activity? We're combining with a hat and in the garden. This means that we're not talking about any old hat, we're talking about a garden hat. You see, John is not only cruel to his dogs, he also gets bored easily. He doesn't like having to use the same hat every time he wants to hit a dog, and so he has hats lying all over his house: in the kitchen, the bathroom, the basement, and, yes, the garden. And today he his hitting the dog...

...using his garden hat:

Tower number four (the most stable one so far) and interpretation number four. Note, though, that John is not the only one can enjoy variety in his hat selection. Suppose we give the garden hat to the dog:

Behold a dog who enjoys the finer things in life. He enjoys wearing hats, yes, but it's not like he'll put just any old headgear on his canine head. This dog only wears garden hats. Unfortunately for him, though, this is not his lucky day:

Our poor fashion-sensitive friend is getting hit. We don't know with what or where, but we do know that he is the recipient of a beating. More generally, we have arrived at our fifth and final tower, as well as our fifth and final interpretation of the example in 1.

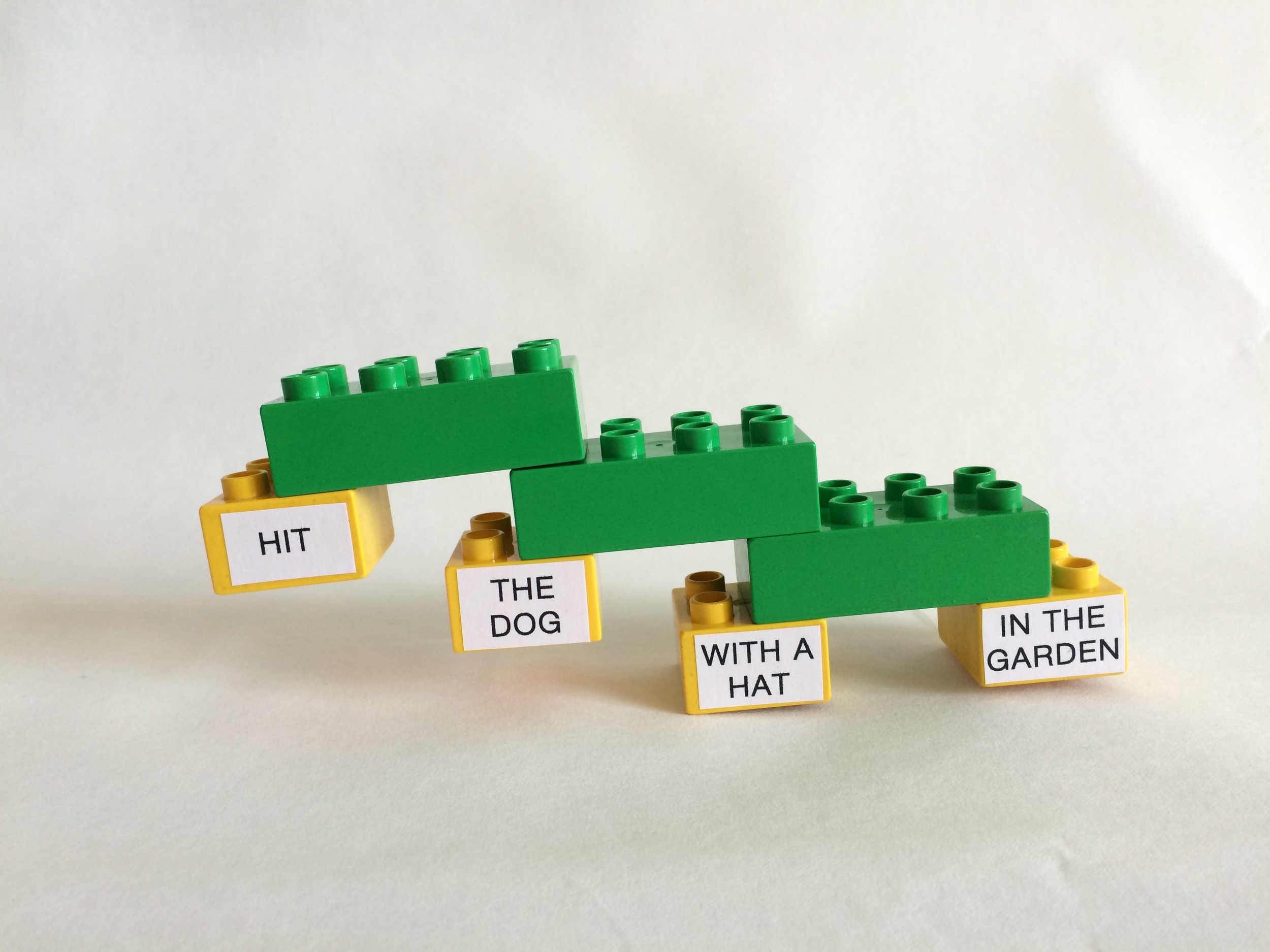

If you've read this far, chances are you're either really interested or my mom, so let my give you one final bonus round: what would have happened if you wanted to first combine the second brick with the fourth one? Well, something like this:

Or like this:

Either way, Houston, we have a bit of a construction problem: the third brick is stuck under the bridge and cannot partake in any further tower building activities. And you know what's really cool (even if it shouldn't come as a surprise by now)? Construction problems with our lego bricks correspond to interpretation problems for our sentence. You see, one thing the sentence John hit the dog with a hat in the garden cannot mean is that the object of John's hitting is the dog in the garden (the garden dog, let's say) and that the instrument he uses in that hitting activity is a hat. (Take a moment to mull that one over; you'll see I'm right.) And that is exactly the interpretation we were building in the last two pictures: by combining the dog with in the garden, we were singling out a particular dog, namely the garden dog. Once again, then, Lego and linguistics go hand in hand.

This post is long enough as it is, so I'll stop here, but I hope to have shown to at least some of you what an exciting research topic natural language can be. Next time: dark matter!